Intelligent Infant Monitoring System

Project IIMS is an initiative to develop an enhanced system of priority-based distraction mechanisms to maximize the safety of infants.

Existing infant monitoring devices, including nanny monitors, video monitoring cameras, and sensors that detect the infant's physiological signals, rely on human intervention: a caregiver must either look after the baby in person or check on the baby remotely, at regular or sporadic intervals. Current baby-monitoring technology is therefore not automated, and it does not employ effective distraction mechanisms to maximize the infant's safety. Our product incorporates a Machine to Machine (M2M) communication system to automatically detect and flag unsafe zones for infants and toddlers who tend to wander the house in the absence of adults. The system employs multiple threshold-based distraction mechanisms to prevent the infant from heading towards a region deemed unsafe when no adult is supervising.

Motivation

System

The system comprises an intelligent, low-cost, camera-based movement detection algorithm that tracks the infant's proximity to static objects in the space that have been identified as unsafe. Once the infant's distance from each unsafe static object is calculated, automated distraction mechanisms are triggered with priorities based on that distance: for instance, a music system at the initial threshold and an RC car at the second threshold, each trying to draw the infant away from the unsafe object. Our product also allows easy switching to remotely operated distraction. The same automated systems can be controlled remotely by the parent or caretaker through a secure connection to deliberately steer the baby toward a certain location or elicit certain behaviors.
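The threshold logic described above can be sketched roughly as follows. This is a minimal illustration and not the project's actual code: the `UnsafeObject` type, the two threshold values, the floor-plane coordinates in metres, and the mechanism names are all assumptions made for the example, and it returns only the highest-priority mechanism for a given distance.

```python
from dataclasses import dataclass
from math import hypot


@dataclass(frozen=True)
class UnsafeObject:
    """A static object flagged as unsafe (hypothetical type for this sketch)."""
    name: str
    x: float  # position on the floor plane, in metres (assumed units)
    y: float


# Hypothetical thresholds; the source does not state the real values.
MUSIC_THRESHOLD_M = 2.0  # initial threshold: start the music system
CAR_THRESHOLD_M = 1.0    # second threshold: send the RC car


def nearest_unsafe_distance(infant_xy, unsafe_objects):
    """Return the distance from the infant to the closest unsafe object.

    Returns infinity when no unsafe objects are registered.
    """
    ix, iy = infant_xy
    return min(
        (hypot(ix - obj.x, iy - obj.y) for obj in unsafe_objects),
        default=float("inf"),
    )


def select_distraction(distance_m):
    """Pick the highest-priority mechanism for the given distance."""
    if distance_m <= CAR_THRESHOLD_M:
        return "rc_car"
    if distance_m <= MUSIC_THRESHOLD_M:
        return "music"
    return "none"


if __name__ == "__main__":
    objects = [UnsafeObject("stove", 0.0, 0.0), UnsafeObject("stairs", 5.0, 0.0)]
    for position in [(3.0, 4.0), (1.5, 0.0), (0.5, 0.0)]:
        d = nearest_unsafe_distance(position, objects)
        print(position, round(d, 2), select_distraction(d))
```

In a real deployment the infant's position would come from the camera-based detector, and the thresholds would need tuning for the room and for how quickly the infant moves.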

Architecture

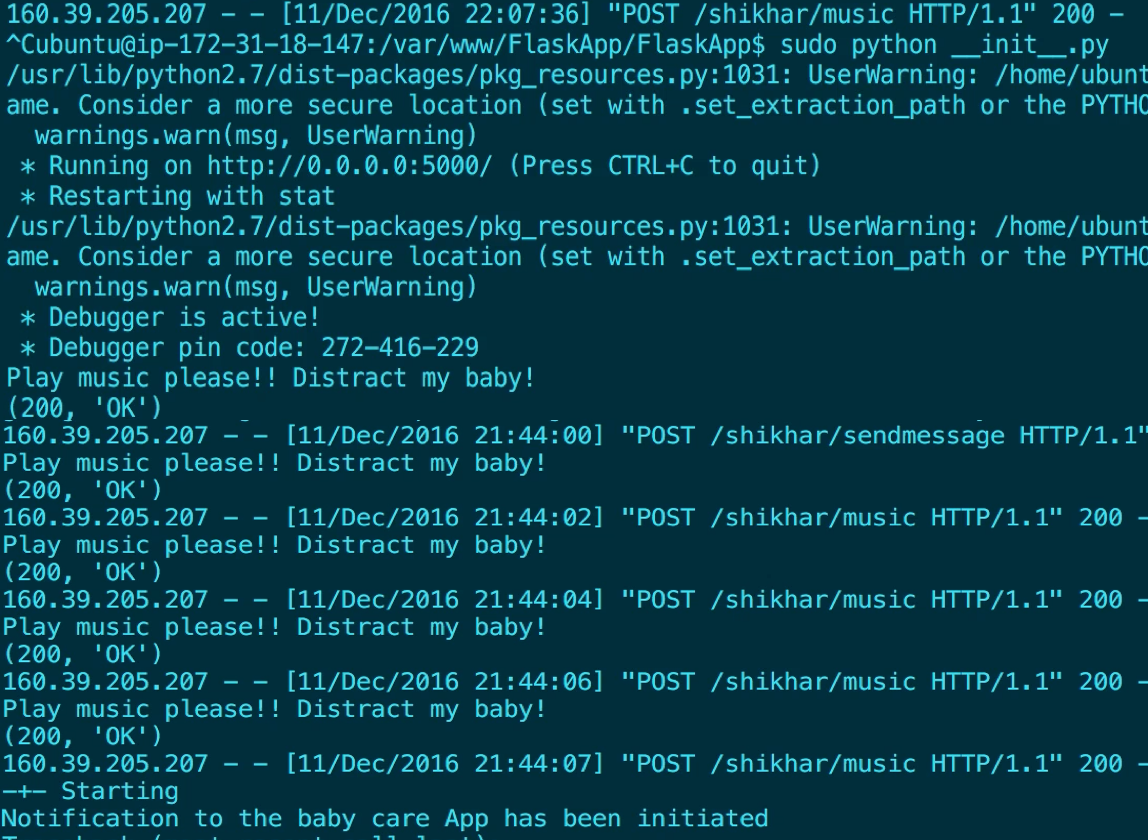

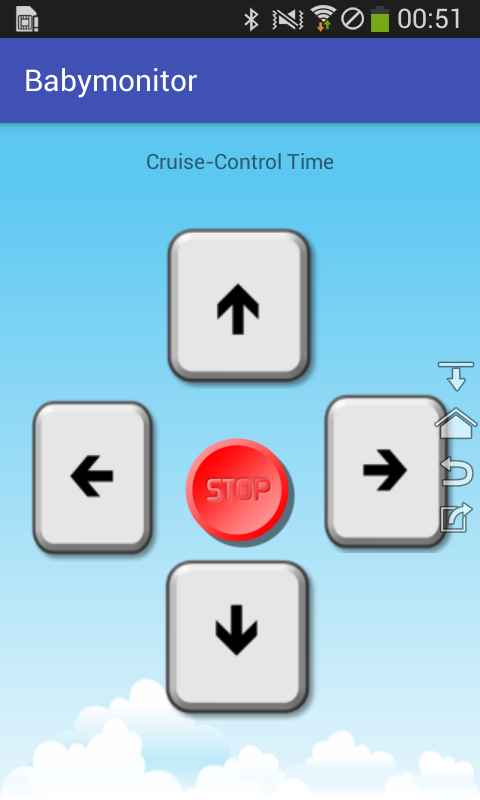

Smartphone App: Baby-care

Technical Components

The system comprises the following low-cost components and features:

- Huzzah - ESP8266

- Web camera

- Remote-controlled wireless car

- Music system

- Amazon Web Services (AWS) cloud server

- Smartphone Application

Prototype

Smartphone application prototype

With the click of a button, the user is redirected to a streaming channel where the live feed of the infant can be viewed at any time.

Parents can seamlessly control the remote-controlled car, which is driven by an ESP8266 chip, by communicating directly with the Wi-Fi chip over a RESTful interface.
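As a rough illustration of what such a request might look like from the client side, the sketch below builds and sends an HTTP command to the car. The `/car` path, the JSON body with a `direction` field, the set of allowed directions, and the plain-HTTP address are all hypothetical; the source does not document the actual endpoints exposed by the ESP8266.

```python
import json
from urllib.request import Request, urlopen

# Hypothetical command set; the real interface may differ.
ALLOWED_DIRECTIONS = {"forward", "backward", "left", "right", "stop"}


def build_car_request(host, direction):
    """Build a POST request for a hypothetical /car endpoint on the ESP8266."""
    if direction not in ALLOWED_DIRECTIONS:
        raise ValueError(f"unsupported direction: {direction!r}")
    body = json.dumps({"direction": direction}).encode("utf-8")
    return Request(
        f"http://{host}/car",  # assumed path and scheme
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def send_car_command(host, direction, timeout_s=2.0):
    """Send the command and return the HTTP status code."""
    with urlopen(build_car_request(host, direction), timeout=timeout_s) as resp:
        return resp.status


if __name__ == "__main__":
    # Only builds the request; sending it needs a device at this address.
    req = build_car_request("192.168.4.1", "forward")
    print(req.get_method(), req.full_url, req.data)
```

Validating the direction on the client before sending keeps malformed commands off the network, though the device itself would still need to reject anything unexpected.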

Another app interface sends commands from the app to remotely control the internet-enabled music system running on the server.

Our Team

Shreya Gautam

Electrical Engineering graduate from Columbia University with specialization in Signal processing and Wireless systems

Link: Shreya Gautam

Shikhar Kwatra

Electrical Engineering graduate from Columbia University with specialization in Hardware design and Embedded systems

Link: Shikhar Kwatra

Eashwar Rangarajan

Computer Engineering graduate from Columbia University with specialization in Embedded systems and Computer architecture

Link: Eashwar Rangarajan

Contact

Eashwar Rangarajan: er2768@columbia.edu

Shikhar Kwatra: sk4094@columbia.edu

Shreya Gautam: sg3319@columbia.edu

Columbia University Department of Electrical Engineering

Class Website:

Columbia University EECS E4764 Fall '16 IoT

Instructor: Professor Xiaofan (Fred) Jiang